How to discover the S3 Bucket size

Mar 24, 2019

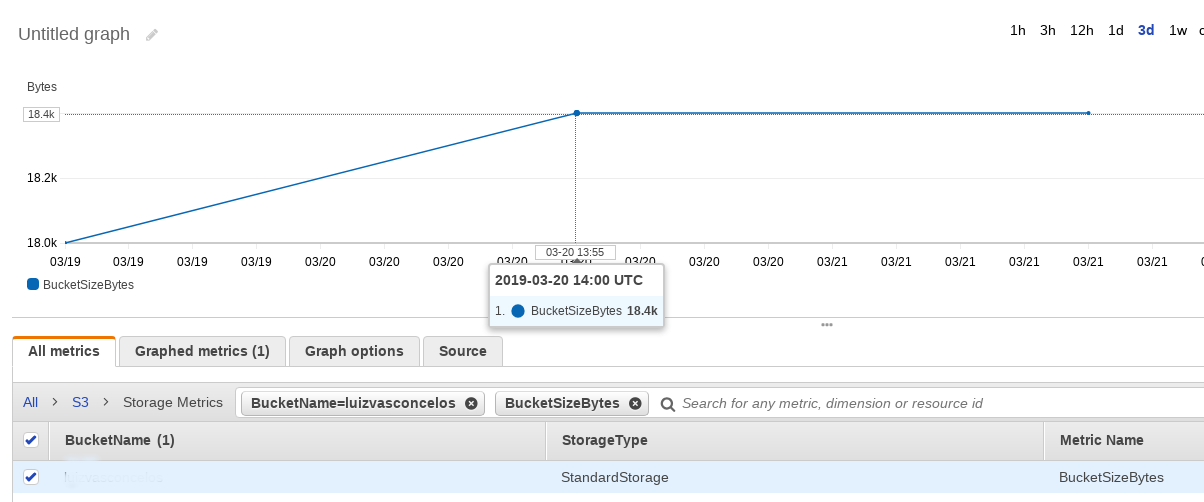

Amazon S3 is a great service, with features like versioning, bucket policies, and encryption, and it is easy to use. Because one of the charges is for the storage space used, it is a good idea to track how much space a bucket is using. AWS provides the bucket size (the BucketSizeBytes metric) through CloudWatch, and we can easily see it in the Console: CloudWatch -> Metrics -> S3 -> Storage Metrics -> (the desired bucket).

Note: AWS reports these storage metrics only once a day, so you need to adjust the graph period (for example, to 1 day) to see the data points.

In this example, we can see that the bucket is using 18.4k. But what if we need to get this information programmatically? We can use the AWS CLI (awscli) to fetch this data, for example:

```shell
aws cloudwatch get-metric-statistics --namespace AWS/S3 \
  --start-time "$(( $(date +%s) - 86400 ))" --end-time "$(date +%s)" --period 86400 \
  --statistics Average --region us-east-1 --metric-name BucketSizeBytes \
  --dimensions Name=BucketName,Value="LernentecBucket" Name=StorageType,Value=StandardStorage
```

Note: you need to adjust the bucket name, the region, and, if needed, the StorageType (for example, StandardIAStorage for objects stored in S3 Standard-IA).

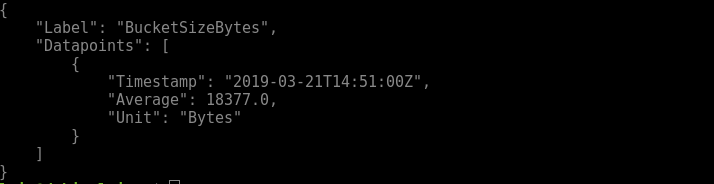

It will return the value in bytes:

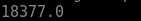
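If you are not sure which StorageType value to use, CloudWatch can list the ones it reports for a bucket. The sketch below wraps aws cloudwatch list-metrics in a hypothetical Bash function, bucket_storage_types; it assumes Bash and an AWS CLI configured with credentials that can read CloudWatch, and the result depends on what CloudWatch currently reports for that bucket.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the StorageType dimension values that CloudWatch
# reports for a bucket's BucketSizeBytes metric.
# Assumes Bash and a configured AWS CLI.

# bucket_storage_types BUCKET [REGION]
bucket_storage_types() {
  local bucket="$1"
  local region="${2:-us-east-1}"

  # --query keeps only the StorageType values; the single-quoted
  # 'StorageType' is a JMESPath raw string literal.
  aws cloudwatch list-metrics \
    --namespace AWS/S3 \
    --metric-name BucketSizeBytes \
    --region "$region" \
    --dimensions "Name=BucketName,Value=${bucket}" \
    --query "Metrics[].Dimensions[?Name=='StorageType'].Value" \
    --output text
}
```

Any value it prints (for example, StandardStorage) can be used as the StorageType dimension in the get-metric-statistics command.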

We can improve the get-metric-statistics command to print only the size by using the --query option:

```shell
aws cloudwatch get-metric-statistics --namespace AWS/S3 \
  --start-time "$(( $(date +%s) - 86400 ))" --end-time "$(date +%s)" --period 86400 \
  --statistics Average --region us-east-1 --metric-name BucketSizeBytes \
  --dimensions Name=BucketName,Value="LernentecBucket" Name=StorageType,Value=StandardStorage \
  --query 'Datapoints[*].Average' --output text
```

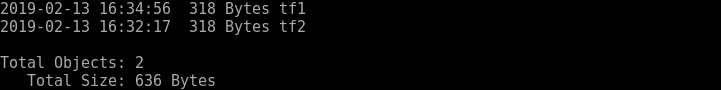
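The text output is a raw byte count. A minimal Bash sketch, assuming a configured AWS CLI and awk, wraps the command in a hypothetical bucket_size_bytes function that takes the bucket, region, and storage type as parameters, and adds a hypothetical to_human helper that converts bytes into 1024-based units (KiB, MiB, GiB). If the time window happens to contain more than one daily data point, the output holds several tab-separated values, and to_human rejects it rather than guessing.

```shell
#!/usr/bin/env bash
# Hypothetical helpers built on the get-metric-statistics call above.
# Assumes Bash, awk, and an AWS CLI configured with CloudWatch read access.

# bucket_size_bytes BUCKET [REGION] [STORAGE_TYPE]
# Prints the Average of BucketSizeBytes over the last 24 hours.
bucket_size_bytes() {
  local bucket="$1"
  local region="${2:-us-east-1}"
  local storage_type="${3:-StandardStorage}"
  local now
  now=$(date +%s)   # one timestamp, so start and end are exactly 24h apart

  aws cloudwatch get-metric-statistics \
    --namespace AWS/S3 \
    --metric-name BucketSizeBytes \
    --start-time "$((now - 86400))" \
    --end-time "$now" \
    --period 86400 \
    --statistics Average \
    --region "$region" \
    --dimensions "Name=BucketName,Value=${bucket}" "Name=StorageType,Value=${storage_type}" \
    --query 'Datapoints[*].Average' \
    --output text
}

# to_human BYTES
# Formats a single byte count with 1024-based units, e.g. 18432 -> "18.0 KiB".
to_human() {
  # Reject empty input, "None", or several tab-separated values.
  case "$1" in
    '' | *[!0-9.eE+-]*)
      echo "to_human: expected one byte count, got '$1'" >&2
      return 1
      ;;
  esac

  awk -v bytes="$1" 'BEGIN {
    split("B KiB MiB GiB TiB PiB", unit, " ")
    b = bytes + 0   # force a numeric value
    i = 1
    # Divide by 1024 until the value is below 1024 or we reach PiB.
    while (b >= 1024 && i < 6) { b /= 1024; i++ }
    printf "%.1f %s\n", b, unit[i]
  }'
}
```

Passing the output of bucket_size_bytes LernentecBucket to to_human prints the size with a unit suffix, for example 18.0 KiB for a reported value of 18432 bytes.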

There is another option: the aws s3 ls command can list every object in the bucket (or under a path) and then summarize their total size. This is not very efficient, because it has to list every object, but it is a good option when we need the size of a specific path, and, unlike the daily CloudWatch metric, it reflects the current contents.

```shell
aws s3 ls --summarize --human-readable --recursive s3://LernentecBucket/tf
```
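When the path size is needed in a script, dropping --human-readable makes the "Total Size:" summary line report plain bytes, which is easy to extract. The sketch below is a hypothetical Bash function, prefix_size_bytes, that assumes a configured AWS CLI and awk, and relies on the summary line format printed by aws s3 ls --summarize.

```shell
#!/usr/bin/env bash
# Hypothetical helper: total size in bytes of the objects under s3://BUCKET/PREFIX.
# Assumes Bash, awk, and a configured AWS CLI. Without --human-readable,
# the "Total Size:" line printed by --summarize is in bytes.

# prefix_size_bytes BUCKET [PREFIX]
prefix_size_bytes() {
  local bucket="$1"
  local prefix="${2:-}"

  # Object lines start with a date, so only the summary line matches.
  aws s3 ls --summarize --recursive "s3://${bucket}/${prefix}" |
    awk '/^ *Total Size:/ { print $3 }'
}
```

For example, prefix_size_bytes LernentecBucket tf prints a single number, which can then be compared against a threshold or formatted as needed.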

Sources:

- https://docs.aws.amazon.com/AmazonS3/latest/dev/cloudwatch-monitoring.html
- https://docs.aws.amazon.com/cli/latest/reference/cloudwatch/get-metric-statistics.html
- https://docs.aws.amazon.com/cli/latest/reference/s3/ls.html